5 Common Unconscious Biases We See In Product Development

This is the second post in a series studying the unconscious biases found in product development. These biases show up when we design the products and software people use, and when we try to uncover precisely what's wrong with our workplace today. The first post in the series can be found here: https://www.podojo.com/unconscious-bias-the-affinity-bias/

Confirmation Bias

Why we care

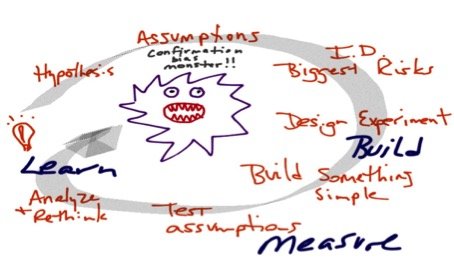

In the world of Lean Startup, where each iteration begins with a hypothesis and ends with the results of an experiment, beware: an insidious enemy awaits.

This enemy is called “confirmation bias.” I'm pretty sure we have all been in a situation where we ran an experiment and the results (somehow!) ran against our expectations. What did you do next? Did you accept the results and change your original hypothesis? Or did you assume the experiment was flawed (I can’t be wrong!)? Or perhaps the experiment appeared to confirm the hypothesis. In that case, did you double back and validate your experiment? What happens to our product output if we keep favoring the information that confirms our beliefs?

What is Confirmation Bias?

Confirmation bias is the tendency to amplify evidence that confirms an existing belief while ignoring facts that refute it. If the data does not reveal a pattern that supports a strongly held opinion or position, product creators who are prone to the bias will supply one. Confirmation bias involves ignoring or rationalizing contradictory data so that it can be forced, like puzzle pieces, into a predetermined framework.

Confirmation bias has a very strong influence on product development. Why? Because great product developers are passionate, opinionated people. Yet we live in a world of uncertainty, and any confirmation at all that we are on the right path is tremendously welcome. Put plainly, it hurts to be wrong, so we look for proof that our idea is right instead of testing whether it is.

A hypothetical example: the product manager has the best idea for the “next big app,” so she directs her team to begin market research to explore its feasibility. The marketing team then conducts surveys, focus groups, and competitive analyses to confirm the team's beliefs about the feature idea. As a result, the questions they craft for their market research will likely be biased toward giving her the answers she wants to hear.

But self-delusion is perhaps the greatest hazard in product development, and product managers should work hard to avoid the trap of pushing agendas instead of acting as representatives of users. This bias comes out most often when we conduct research. Instead of testing a hypothesis (the scientific approach, which means staying open to whatever the experiment shows), we tend to select the research data that supports what we want to see happen. This devalues, and in some cases completely negates, the purpose of research: we want to find out what is really happening, not to support our own beliefs.

Exercise

In the 1960s, a psychologist named Peter Wason created a simple experiment that you can replicate to quickly show your team how insidious confirmation bias is. He asked volunteers to work out the rule behind a series of three numbers he provided. He gave them the example “2-4-6,” and they were allowed to construct their own three-number series to test their hypotheses. For each series they constructed, Wason told them whether or not it conformed to the rule. The actual rule was simply any three numbers in ascending order.

What he found was that participants tended to test only sequences that would confirm their hypothesis. For example, if they thought the rule was “numbers increase by two,” they would test only sequences that fit it, such as 6-8-10, and avoid sequences that violated it, such as 10-20-30.
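To make the point concrete, here is a minimal Python sketch of the 2-4-6 task. The function names (`hidden_rule`, `guess_step_of_two`, `is_informative`) and the test sequences are illustrative assumptions, not Wason's original materials. It shows why confirming tests teach nothing in this case: a test can only expose a wrong hypothesis when the hidden rule's answer differs from what the hypothesis predicts, and every sequence that counts up by two fits both.

```python
# Illustrative sketch of Wason's 2-4-6 task (hypothetical helper names).

def hidden_rule(seq):
    """Wason's actual rule: any three numbers in ascending order."""
    a, b, c = seq
    return a < b < c


def guess_step_of_two(seq):
    """A typical, too-narrow hypothesis: each number is 2 more than the last."""
    a, b, c = seq
    return b - a == 2 and c - b == 2


def is_informative(seq, hypothesis, rule=hidden_rule):
    """A test can only expose a wrong hypothesis when the rule's yes/no
    answer differs from the hypothesis's prediction."""
    return hypothesis(seq) != rule(seq)


# Tests the participant expects a "yes" for (confirmation-seeking).
expected_yes = [(6, 8, 10), (20, 22, 24), (1, 3, 5)]
# Tests the participant expects a "no" for (attempts to break the hypothesis).
expected_no = [(10, 20, 30), (1, 2, 3), (5, 9, 100)]

for seq in expected_yes + expected_no:
    print(
        seq,
        "predicted:", "yes" if guess_step_of_two(seq) else "no",
        "| answer:", "yes" if hidden_rule(seq) else "no",
        "| informative:", is_informative(seq, guess_step_of_two),
    )
```

Every `expected_yes` test comes back "yes" exactly as predicted, so confidence grows while nothing is learned. Each `expected_no` test also comes back "yes," contradicting the prediction and revealing that the real rule is broader than the guess.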

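When you run this exercise with your team, prepare a sheet with four columns (the respondent's new sequence, their guess at the rule, their % confidence, and your yes/no answer) and up to five rows to collect the data.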

Provide the sequence 2, 4, 6. Then ask the respondent to propose a new sequence, state their guess at the rule, and give their % confidence. Tell them you will answer “yes” or “no” depending on whether the new sequence fits the rule (any three numbers in ascending order). After four or five guesses, ask them for their final guess at the rule.
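If you prefer to keep score digitally rather than on paper, here is a minimal Python sketch of the four-column sheet for this exercise. The `Trial` record, the helper names, the `MAX_ROWS` constant, and the sample responses are all hypothetical; the sample rows are made up for illustration and are not data from a real session.

```python
from dataclasses import dataclass

MAX_ROWS = 5  # the exercise suggests up to 5 guesses per respondent


@dataclass
class Trial:
    sequence: tuple      # the respondent's new three-number sequence
    guessed_rule: str    # their current guess at the rule
    confidence: int      # their confidence, in percent
    fits: bool           # the facilitator's "yes"/"no" answer


def fits_rule(sequence):
    """The hidden rule the facilitator checks against: any three ascending numbers."""
    a, b, c = sequence
    return a < b < c


def record(trials, sequence, guessed_rule, confidence):
    """Answer a guess and append it as a new row of the sheet."""
    if len(trials) >= MAX_ROWS:
        raise ValueError("sheet is full; ask for the final guess")
    trial = Trial(tuple(sequence), guessed_rule, confidence, fits_rule(sequence))
    trials.append(trial)
    return trial


def print_sheet(trials):
    """Print the four columns: sequence, guessed rule, confidence, answer."""
    print(f"{'Sequence':<12}{'Guessed rule':<24}{'Confidence':<12}Fits rule?")
    for t in trials:
        seq = "-".join(str(n) for n in t.sequence)
        answer = "yes" if t.fits else "no"
        print(f"{seq:<12}{t.guessed_rule:<24}{t.confidence:>3}%{'':<8}{answer}")


# Made-up responses, for illustration only.
sheet = []
record(sheet, (8, 10, 12), "counting up by 2", 80)
record(sheet, (3, 2, 1), "any ascending numbers", 40)
print_sheet(sheet)
```

Keeping the hidden rule in a single function means the facilitator's answers stay consistent from row to row, and the row limit nudges you to ask for the final guess at the point the exercise suggests.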

Respondent #1

As shown in the example below, the respondent sought only confirmatory information about the rule. The person thought the rule was "counting up by twos" from the beginning and kept testing sequences that counted up by two, never attempting to falsify that hypothesis with other kinds of sequences. It’s also worth noting that the respondent's confidence was highest when the least information was known.

Respondent’s guess of the rule: counting up by 2. False. (If you recall, the rule is simpler than that: it is “any 3 numbers counting up.”)

Respondent #2

Here’s another example from a respondent. Here you can see that only when we consistently try to falsify our hypothesis can we end up with the correct answer.

What you can do

Confirmation bias is a problem of the ego, and we’re all egotistical at some point. If you can’t stand to be wrong, you’re going to fall prey to it over and over and over again. Learn to value truth over “correctness” and you’ll be heading in the right direction.

Seek out disagreement. If you’re wrong, it will help you find out sooner and actually accelerate your learning. Teach your whole team to play devil’s advocate and hunt for the data that proves the hypothesis invalid. If you turn out to be wrong, don’t worry: as the writing advice often attributed to Allen Ginsberg goes, you should never get so attached to your work that you can’t let it go; you should be able to “kill your darlings.”

Adopt an objective, rigorous experimental methodology that captures the true sentiment of your users.

Find people who are not afraid to tell you the truth, and ask insightful questions such as “How can this experiment be improved?” For example, instead of asking, “Do you think this feature is a good idea for this product? Would you be interested?”, ask your users to rank product features using conjoint analysis to discover their preferences.

Get out of the building and look for people who disagree with you and examine the evidence they present.

Read all of the viewpoints, not just the viewpoints you like.

In short, we don’t like having our opinions contradicted. This is why falsifiability, a cornerstone of the scientific method, is so powerful: it forces us to construct hypotheses that can be proven wrong. Without it, we would tend to run only positive tests that confirm what we think we know.

The next post in our series on unconscious biases in product development covers the IKEA effect.